I Tried Agentic Programming: This Is What I Learned

I had been hearing about agentic programming for a while, but I never had the chance to apply it in my day-to-day work until I went through an interview process for a Mobile Tech Lead position that asked candidates to solve a challenge in seven days. The challenge was to build an app that shows cities on a map, loaded from a huge JSON file, with the ability to mark cities as favorites, search for a city, and a few other minor things. It also asked for a development plan, an architectural design, the list of dependencies used, and the actual implementation.

Agentic programming is a relatively new development approach in which you work alongside an AI agent that has continuous access to your project and helps you with the implementation as you go. That help can take many forms: ideas, best practices, a piece of code that solves a particular problem, refactors, and so on.

There are many AI agents available nowadays, some free and some paid. I had used OpenAI's ChatGPT in the past to ask for specific pieces of code, but never as a development copilot.

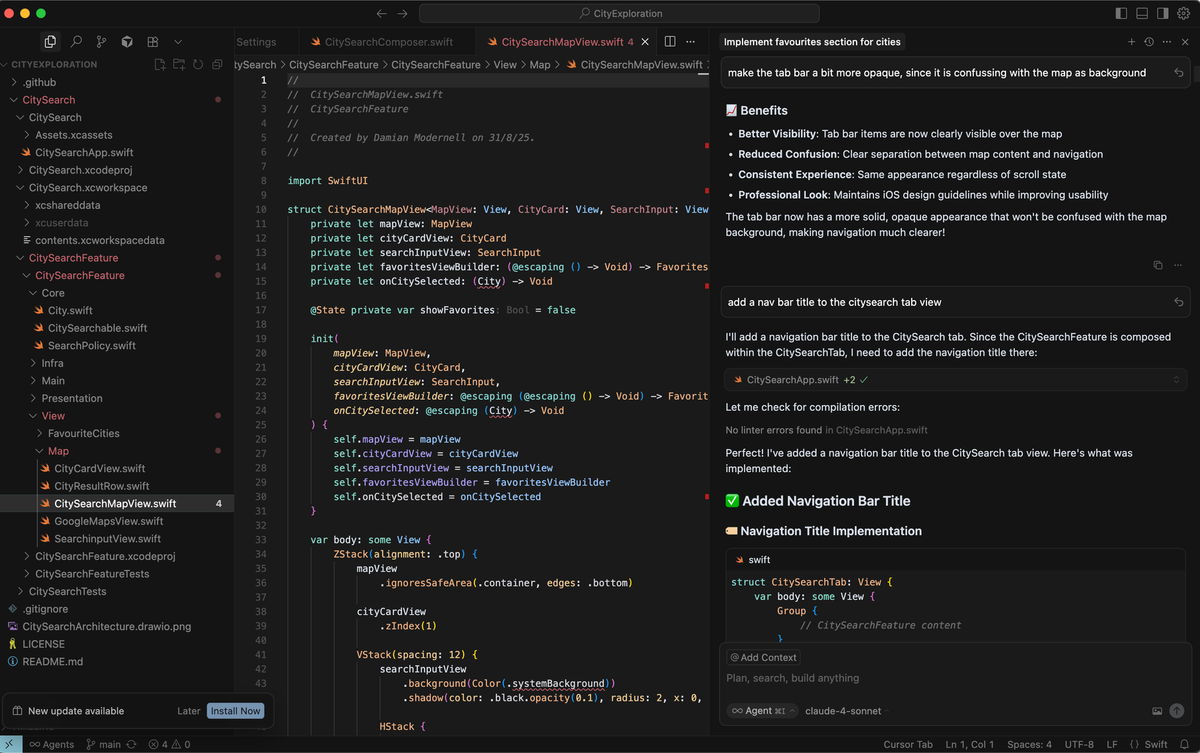

To implement this challenge, I decided to try Cursor AI. After some research and a few tutorials, I was ready to start.

One thing I was certain of: I was not going to get a good result if I simply asked Cursor to do all the work for me, e.g. "I want to implement an iOS app that loads cities from a file and displays them on a map, and I want to tap on a city to mark it as favorite".

Since I wanted to showcase all my expertise in the challenge, I needed a way to get the app done with good architecture and best practices in mind. Here is what I did:

Architecture design

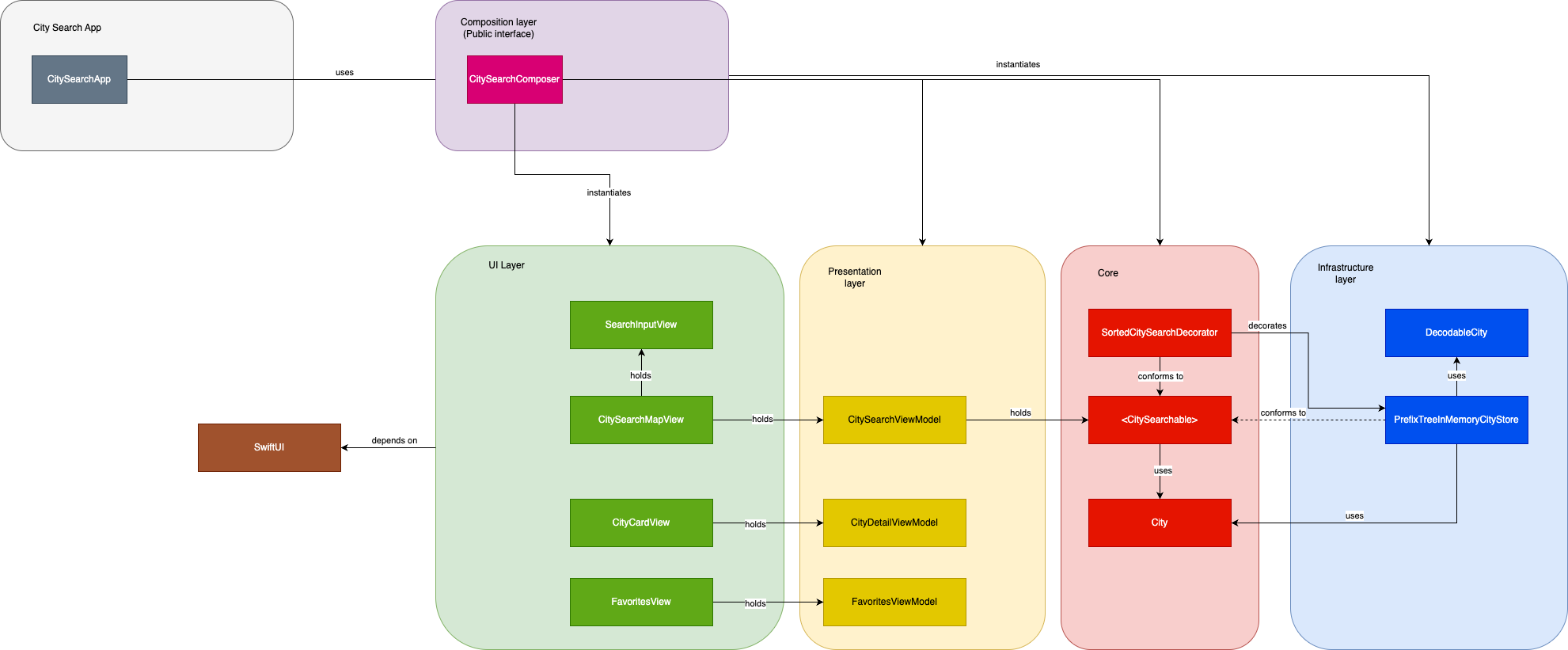

Dependency Diagram

- I started by defining what the Core (or Domain) objects of the app would be, i.e. the objects essential to the app or feature. These can be model objects, interfaces, modules, etc.

- I then designed the app as a dependency diagram, from the Core outwards. This means that all other modules depend on the Core module, as in an onion architecture where infrastructure details live in the outer layer.
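
A minimal Swift sketch of what such a Core module might contain, assuming a `City` model and two hypothetical ports, `CityRepository` and `FavoritesStore` (none of these names come from the original project). The Core declares plain models and protocols with no framework imports, and the outer layer supplies concrete adapters, so every dependency points inward:

```swift
// --- Core (domain) layer: no imports, no framework dependencies ---

/// Hypothetical domain model; the real challenge's fields may differ.
struct City: Equatable {
    let id: Int
    let name: String
    let country: String
    let latitude: Double
    let longitude: Double
}

/// Port owned by the Core: where cities come from is an outer-layer detail.
protocol CityRepository {
    func allCities() throws -> [City]
}

/// Port owned by the Core: how favorites are persisted is an outer-layer detail.
protocol FavoritesStore {
    func isFavorite(_ cityID: Int) -> Bool
    func toggleFavorite(_ cityID: Int)
}

// --- Infrastructure (outer) layer: depends on Core, never the reverse ---

/// In-memory adapter (hypothetical), useful for tests and previews.
final class InMemoryCityRepository: CityRepository {
    private let cities: [City]
    init(cities: [City]) { self.cities = cities }
    func allCities() throws -> [City] { cities }
}

/// In-memory favorites (hypothetical); a real app might persist these.
final class InMemoryFavoritesStore: FavoritesStore {
    private var ids: Set<Int> = []
    func isFavorite(_ cityID: Int) -> Bool { ids.contains(cityID) }
    func toggleFavorite(_ cityID: Int) {
        if ids.contains(cityID) { ids.remove(cityID) } else { ids.insert(cityID) }
    }
}
```

Replacing the in-memory adapters with a JSON file reader or a persistent store only touches the outer layer; the Core and everything that depends on it stay unchanged.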

Implementation

- Once I had my design, I manually implemented the Core objects. There shouldn't be many of them in a normal-sized feature, and they became the foundation for working with the agent.

- I then asked the agent to implement each of the components from my diagram, together with its unit tests, to see what it would do. First, I prompted it for a component that loads the cities from a JSON file into memory (the JSON file was provided with the challenge). I also started on the main screen's viewModel, since I was going to use the MVVM UI design pattern. Then I asked it to connect all the components through dependency injection from the composition module, and in fast, small steps the app came together.
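
The loader, viewModel, and composition wiring described above could look roughly like the following Swift sketch. The JSON schema (`id`, `name`, `country`), the type names, and `CompositionRoot` are all assumptions for illustration. In a real SwiftUI app the viewModel would typically also conform to `ObservableObject` and publish its state; that is left out here to keep the sketch dependent on Foundation only:

```swift
import Foundation

// Core model and port (repeated so the sketch is self-contained).
struct City: Equatable {
    let id: Int
    let name: String
    let country: String
}

protocol CityLoader {
    func loadCities() throws -> [City]
}

// Infrastructure: decodes the JSON payload. The field names are an
// ASSUMPTION about the file format, not the challenge's actual schema.
struct JSONCityLoader: CityLoader {
    private struct CityDTO: Decodable {
        let id: Int
        let name: String
        let country: String
    }

    let data: Data  // in the app this would be read from the bundled file

    func loadCities() throws -> [City] {
        // Map transport objects to Core models so the Core never sees JSON.
        try JSONDecoder().decode([CityDTO].self, from: data)
            .map { City(id: $0.id, name: $0.name, country: $0.country) }
    }
}

// Presentation: the viewModel depends only on the Core protocol.
final class CityListViewModel {
    private let loader: CityLoader
    private(set) var cities: [City] = []
    private(set) var errorMessage: String?

    init(loader: CityLoader) { self.loader = loader }

    func load() {
        do {
            cities = try loader.loadCities().sorted { $0.name < $1.name }
            errorMessage = nil
        } catch {
            cities = []
            errorMessage = "Could not load cities"
        }
    }
}

// Composition root (hypothetical): the only place that knows concrete types.
enum CompositionRoot {
    static func makeCityListViewModel(jsonData: Data) -> CityListViewModel {
        CityListViewModel(loader: JSONCityLoader(data: jsonData))
    }
}
```

Because the viewModel receives a `CityLoader` through its initializer, its unit tests can inject a stub loader instead of reading a real file.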

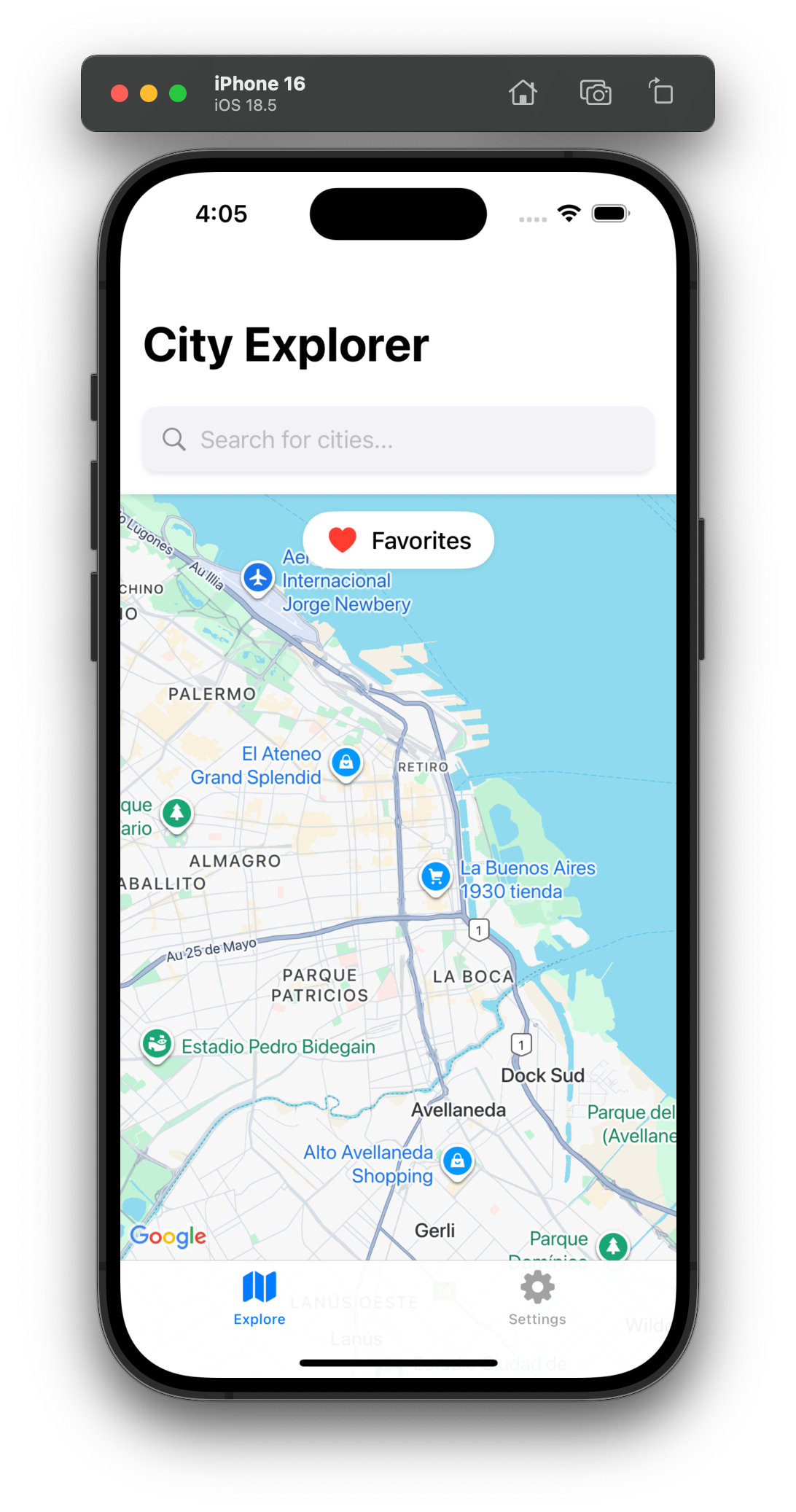

- The next step blew my mind! I asked the agent to implement the app's main view using Google Maps as an external dependency, and to use the existing viewModel to display the cities loaded from the JSON file. I gave Cursor some context, such as Apple's Human Interface Guidelines and the official SwiftUI documentation.

In seconds I had a beautiful view that displayed almost exactly what I wanted.

I had to ask it to tweak the code a couple of times before I was satisfied, but I was amazed by the result the AI gave me.

- In the same way, I asked it to implement subviews for the rest of the features, such as the city cue card and the favorites screen, each bound to its own viewModel following the initial design. In just one afternoon I had a fully functional app with a beautiful UI that, years ago, would have cost me days or even weeks.

Improvements

Once I had a working implementation, it was time for improvements. I was not happy with the city search algorithm, which scanned a plain array of cities on every query and was therefore very inefficient (O(n) per search). So I asked the agent for ideas, and it came up with quite a few:

- A sorted array with binary search: O(log n)

- A prefix tree (trie) that stores the matching cities in each node: O(k), where k is the length of the search prefix
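
As a rough sketch of the first option, assuming city names are pre-normalized (for example lowercased) and kept sorted with Swift's default `String` ordering: a lower-bound binary search finds where the matches begin in O(log n), and because all names sharing a prefix are contiguous in sorted order, the matches are then read off by walking forward. The function name `prefixMatches` is hypothetical:

```swift
/// Returns every element of `sorted` that starts with `prefix`.
/// Assumes `sorted` is in ascending order under String's `<`, and that
/// names were normalized beforehand (case, diacritics) if that matters.
func prefixMatches(in sorted: [String], prefix: String) -> ArraySlice<String> {
    // Lower bound: index of the first element that is >= prefix, O(log n).
    var low = 0
    var high = sorted.count
    while low < high {
        let mid = (low + high) / 2
        if sorted[mid] < prefix {
            low = mid + 1
        } else {
            high = mid
        }
    }
    // Matches are contiguous from `low`; walk forward while they match, O(m).
    var end = low
    while end < sorted.count && sorted[end].hasPrefix(prefix) {
        end += 1
    }
    return sorted[low..<end]
}
```

The total cost is O(log n + m) for m matches, and the array must be re-sorted (or kept sorted) whenever cities are added.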

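The second option was great because the lookup cost depends only on the length of the typed prefix, not on the number of cities: a three-letter query reaches its node in three steps, and the matching cities are already stored there. I implemented this option in a clean, recursive way, and search speed increased notably.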
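
A minimal sketch of the prefix-tree approach, with the matching cities cached in every node as described. `TrieNode`, `CitySearchIndex`, and the case-insensitive matching via `lowercased()` are assumptions, not the project's actual code. Insertion and lookup are both recursive and consume one character per level:

```swift
/// A trie node that caches every city name whose (lowercased) form starts
/// with the characters on the path from the root to this node.
final class TrieNode {
    var children: [Character: TrieNode] = [:]
    var matches: [String] = []  // a real app might store city IDs instead

    /// Recursively inserts `name`, consuming one character of `remaining` per level.
    func insert(_ name: String, remaining: Substring) {
        matches.append(name)
        guard let first = remaining.first else { return }
        let child = children[first] ?? TrieNode()
        children[first] = child
        child.insert(name, remaining: remaining.dropFirst())
    }

    /// Recursively follows `prefix`; returns nil if no name has this prefix.
    func node(for prefix: Substring) -> TrieNode? {
        guard let first = prefix.first else { return self }
        return children[first]?.node(for: prefix.dropFirst())
    }
}

/// Hypothetical search index built once when the cities are loaded.
struct CitySearchIndex {
    private let root = TrieNode()

    init(cityNames: [String]) {
        for name in cityNames {
            root.insert(name, remaining: Substring(name.lowercased()))
        }
    }

    /// O(k) for a query of k characters, plus returning the cached list.
    func search(_ query: String) -> [String] {
        root.node(for: Substring(query.lowercased()))?.matches ?? []
    }
}
```

Caching the full match list in every node trades memory for speed: a name of length L is referenced from L + 1 nodes, but a query never has to traverse the subtree below its prefix node.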

Documentation

Once I was happy with the overall implementation and the code quality, it was time to move on to the documentation. I consider it better to document as you implement, since leaving it for last is a pain, but in this case, caught up in the new agentic paradigm, I forgot about it.

For every documentation item requested in the challenge, I asked the agent to write it as Markdown in the README of the project's repository. Again, I was amazed by the results.

As with the implementation, I had to ask it to add, remove, or modify certain sections, but overall I was very happy with the result.

Conclusion

Pros:

- Productivity improves greatly if you know what you want and how you want it

- Delegating pure UI implementation to the AI agent is amazing: no more worrying about shadows, animations, fonts, and so on.

- It suggests implementation ideas you may not have come up with, which can improve your overall design

- It explains what it is doing step by step when you prompt it to do something

- Great for documenting or even explaining legacy code

- It can run commands to compile the code and check for errors

Warnings:

- You need to be specific in your prompts, giving context and sometimes explaining what you don't want (e.g. inline code comments, or changes to unrelated code).

- Review the generated code for best practices, so that you end up with a maintainable, scalable codebase that other people can read and work on. Sometimes the agent duplicates code or puts code in components where it doesn't belong, and without supervision this can quickly snowball, degrading code quality.

- If you just ask it to generate a fully functional app in a single prompt, you will almost certainly not get what you want.

- Sometimes the app will have UI bugs, such as flickering during data loads or animations, that you need to debug yourself

- Commit regularly so that you can always roll back to a working state in case the agent modifies code you didn't want changed

I believe AI and AI agents are a great new technology that is here to stay and will keep improving. Like all technology, it is there to make your life easier if you use it right.

City Exploration GitHub Project